How do NVIDIA’s new GeForce RTX 5090 and 5080, released with fanfare regarding their new features and capabilities, perform in real-world AI applications?

Does the size of a Large Language Model affect relative performance when testing a variety of GPUs?

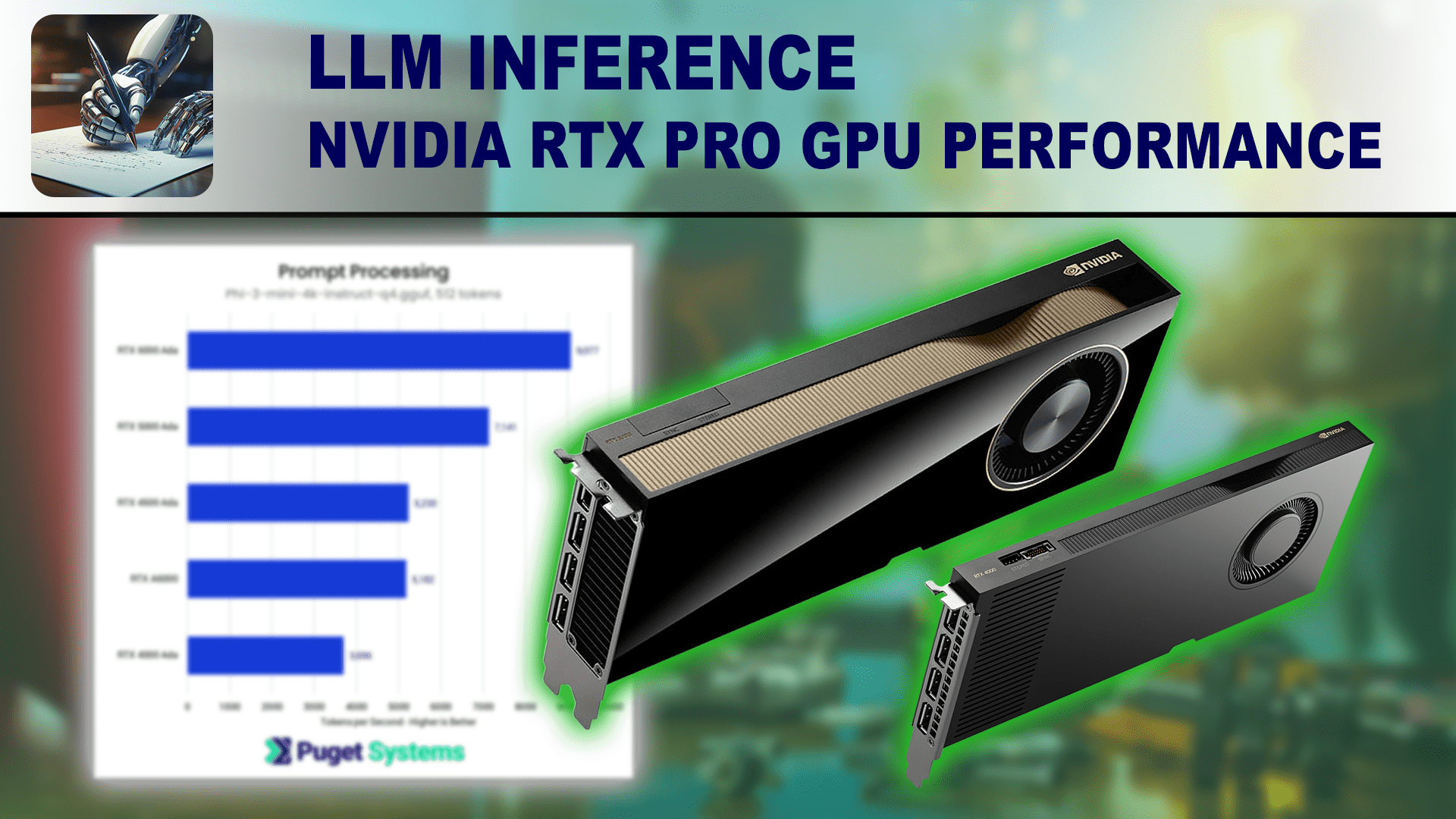

How do a selection of GPUs from NVIDIA’s professional lineup compare to each other in the llama.cpp benchmark?

How do a selection of GPUs from NVIDIA’s GeForce series compare to each other in the llama.cpp benchmark?

What should you consider when you first start running LLMs locally?