Does the size of a Large Language Model affect relative performance when testing a variety of GPUs?

Our new After Effects benchmark includes a number of 3D-based tests that make heavy use of the GPU. Because of these changes from previous benchmark versions, we thought it was a good time to do an in-depth analysis of the current GPUs on the market to see how they compare.

How do various GPUs from NVIDIA’s GeForce series compare to one another in the llama.cpp benchmark?

For Topaz Video AI, your choice of GPU can have a major impact on performance. But which consumer GPU is best: NVIDIA GeForce, AMD Radeon, or Intel Arc?

With the recent overhaul of our DaVinci Resolve benchmark, we thought it was a good time to do an in-depth analysis of the current consumer GPUs on the market to see how they compare and how well they scale in multi-GPU configurations in DaVinci Resolve Studio.

Following our recent M3 Max MacBook testing, we used our benchmark database to compare Mac and PC performance for content creation.

NVIDIA has released SUPER variants of its RTX 4080, 4070 Ti, and 4070 consumer GPUs. How do they compare to their non-SUPER counterparts?

How does performance compare across a variety of consumer-grade GPUs for SDXL LoRA training?

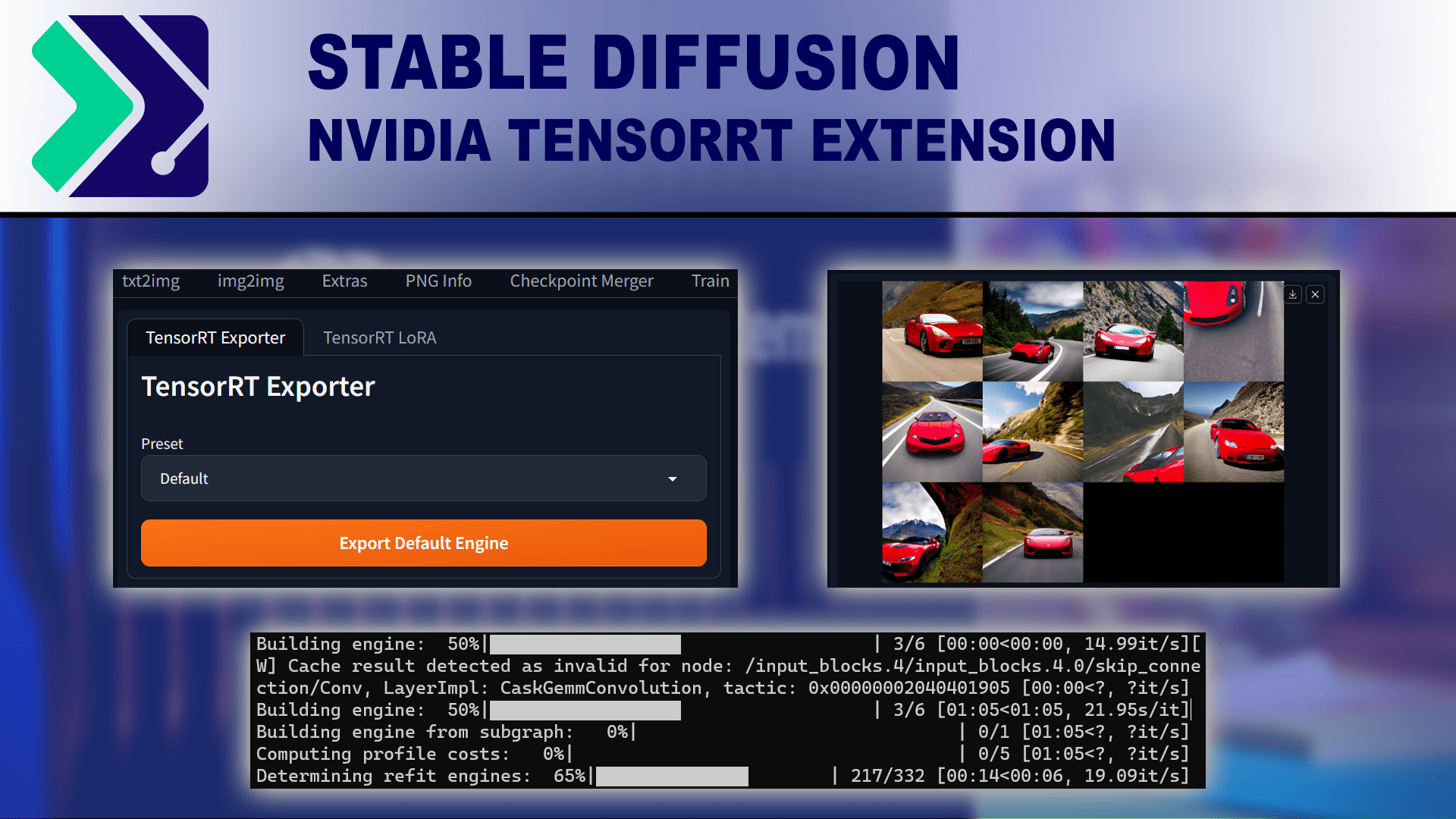

NVIDIA has released a TensorRT extension for Stable Diffusion in Automatic 1111, promising significant performance gains. But does it work as advertised?

Stable Diffusion is seeing more use for professional content creation work. How do NVIDIA GeForce and AMD Radeon cards compare in this workflow?